PRESENTATION

This is a joint research project between two computer graphics research groups, at the University of Strasbourg (France) and at the Karlsruhe Institute of Technology (Germany). The project is funded by the national agencies (ANR in France and DFG in Germany) and runs from 2020 to 2023. The main objective is to build a new workflow for the efficient design, generation and rendering of procedural textures in large virtual scenes.

Context: visual content of digital worlds

Current virtual worlds are huge: consider, for example, virtual film sets, cultural heritage visualization, or planet-sized landscape explorers (like Google Earth). The management (i.e. creation, editing, storage, transfer, processing and rendering) of large amounts of 3D data is a serious issue in graphics applications. Even though the memory and processing power of graphics processors (GPUs) have increased considerably over the last decades, the amount of data produced by the various content production workflows is still growing much faster. The problem is that, in classical production pipelines and end-user applications, virtual environments are pre-computed and explicitly stored as triangles and huge texture maps. The latter are of paramount importance, since textures are responsible for most of the displayed visual details. Because the databases are enormous, this pipeline already requires setting up complex streaming technologies and virtual memory for both modeling and rendering. This drastically increases production costs and hampers the dissemination of data through classical storage media.

Tools: procedural textures and ray-tracing

Many modeling tools represent textures compactly during the production process using a generative approach, also called procedural texturing. The textures are defined by a directed acyclic graph, the Procedural Texture Graph (PTG): source nodes are mathematical functions; inner nodes are pixel processing operations; sink nodes are the final output textures. The texture is generated by traversing the graph. On the rendering side, we are interested in algorithms based on ray-tracing, which take into account the geometry of all objects, the textures, the lighting conditions and the virtual camera parameters.
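The graph structure described above can be illustrated with a minimal Python sketch. It is not the project's implementation: the `Node` class, the `bake` helper and the particular nodes (`stripes`, `gradient`, `product`, `sink`) are hypothetical names chosen for illustration, and inner nodes are assumed to be point-wise operations, whereas real pixel processing operations may need neighbouring pixels.

```python
import math

class Node:
    """One graph node: a function applied to the values of its input nodes."""
    def __init__(self, fn, inputs=()):
        self.fn = fn
        self.inputs = tuple(inputs)

    def eval(self, u, v, cache):
        # Traverse the DAG depth-first; the per-point cache ensures that a node
        # shared by several consumers is evaluated only once per point.
        key = id(self)
        if key not in cache:
            args = [node.eval(u, v, cache) for node in self.inputs]
            cache[key] = self.fn(u, v, *args)
        return cache[key]

# Source nodes: mathematical functions of the texture coordinates (u, v).
stripes = Node(lambda u, v: 0.5 + 0.5 * math.sin(2.0 * math.pi * 4.0 * u))
gradient = Node(lambda u, v: v)

# Inner node: combines the values of its two inputs (point-wise assumption).
product = Node(lambda u, v, a, b: a * b, inputs=(stripes, gradient))

# Sink node: the final output, clamped to [0, 1].
sink = Node(lambda u, v, x: min(1.0, max(0.0, x)), inputs=(product,))

def bake(sink_node, width, height):
    """Generate the whole texture by evaluating the graph at every texel centre."""
    texture = []
    for j in range(height):
        row = []
        for i in range(width):
            u = (i + 0.5) / width
            v = (j + 0.5) / height
            row.append(sink_node.eval(u, v, {}))
        texture.append(row)
    return texture

texture = bake(sink, 8, 8)
```

Here `bake` corresponds to generating and storing the full output texture; the graph itself stays a few lines long whatever the output resolution, which is the compactness that procedural texturing relies on.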

Goal: a new workflow

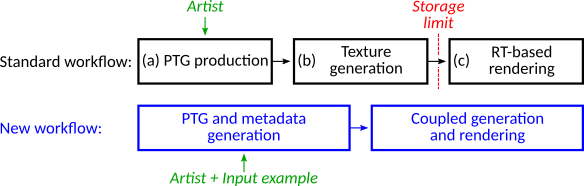

The current standard workflow consists of three successive stages. (a) The PTGs are designed by artists using dedicated software. (b) The output texture is computed and stored. (c) The 3D scene is rendered, resulting in the final image or video of the scene.

We propose a new workflow. The generation is tightly coupled with rendering: the graph is evaluated on demand, and controlled by the output rendering requests, so as to avoid pre-computation and storage. The graph is enhanced with metadata that encode how the virtual light rays interact with the texture graph, and that allow for an actual computation of the ray propagation. Novel algorithms help the artist to produce the procedural texture graph with metadata from an input example.
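The idea of on-demand evaluation can be sketched in Python as follows. This is only an illustration under simplifying assumptions, not the project's algorithm: `OnDemandTexture`, `checker` and the list of hit points are hypothetical, the graph is reduced to a single function of the texture coordinates, and the ray-interaction metadata is not modeled at all.

```python
class OnDemandTexture:
    """Evaluates a procedural texture only where the renderer asks for it."""
    def __init__(self, graph_fn):
        self.graph_fn = graph_fn   # stands in for a full texture graph
        self.evaluations = 0       # counts how many points were evaluated

    def sample(self, u, v):
        # No texel grid is pre-computed or stored: the value is produced
        # at the moment a rendering request arrives.
        self.evaluations += 1
        return self.graph_fn(u, v)

def checker(u, v, cells=8):
    """A toy procedural texture: a checkerboard with cells x cells squares."""
    return float((int(u * cells) + int(v * cells)) % 2)

tex = OnDemandTexture(checker)

# Hypothetical texture coordinates at which rays hit the textured surface.
hits = [(0.1, 0.1), (0.6, 0.2), (0.9, 0.9)]
colors = [tex.sample(u, v) for u, v in hits]
```

In this toy setting only three evaluations are performed, whereas storing the same texture at 4096 x 4096 resolution beforehand would require about 16.8 million texel evaluations, regardless of how many texels end up visible.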